Lately I’ve been exploring local AI video generation. This has been an on-again, off-again interest of mine. I’ve done significant research on it, I’ve built lots of tools around it, and I’m constantly trying out new models and tools. I’ve spent years working with AI video in months-long spurts, then bouncing off to other special interests.

In any case, I’m back to AI video with another new tool, FramePack. FramePack is genuinely innovative: it generates the last frame first and then works backwards. It only requires 6 GB of VRAM, which is significantly less than most AI video generation models need. It builds on existing models with some modifications, which means the same approach can be applied to other existing models. This is going to change a lot for local and even online video generation. It’s what I used to call a catalyst event in AI, back before we became saturated with them. For a while every other week brought a new catalyst event, so I stopped using the term. I’m bringing it back now because, first, it has been a while since we had one, and second, this one clearly qualifies.

If you’d like to check it out, you can find it here: https://github.com/lllyasviel/FramePack.git

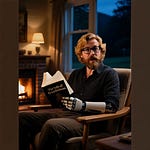

The developer behind this is responsible for a lot of innovations in the open source AI community. It’s pretty easy to install, though it does need a bit of space (a 50 GB download for the models). It works on most modern gaming GPUs (6 GB of VRAM), the quality is pretty decent, and the cohesion is extremely good. All it needs to generate a video is a single image and a prompt.

Since I’ve gained more followers, I figured I’d update you guys on my current project. I’m also still working on text-to-speech AI systems, my wife’s AI writing assistant, and my son’s learning UI, among other things.